Alerting is an essential aspect of infrastructure and application monitoring. With alerts, you can quickly know when something is amiss and respond immediately — often even before the performance issue impacts your user experience in a production environment. In this article, we’ll take a look at how to configure Prometheus alerts for a Kubernetes environment.

Prometheus Alerting

About Alertmanager

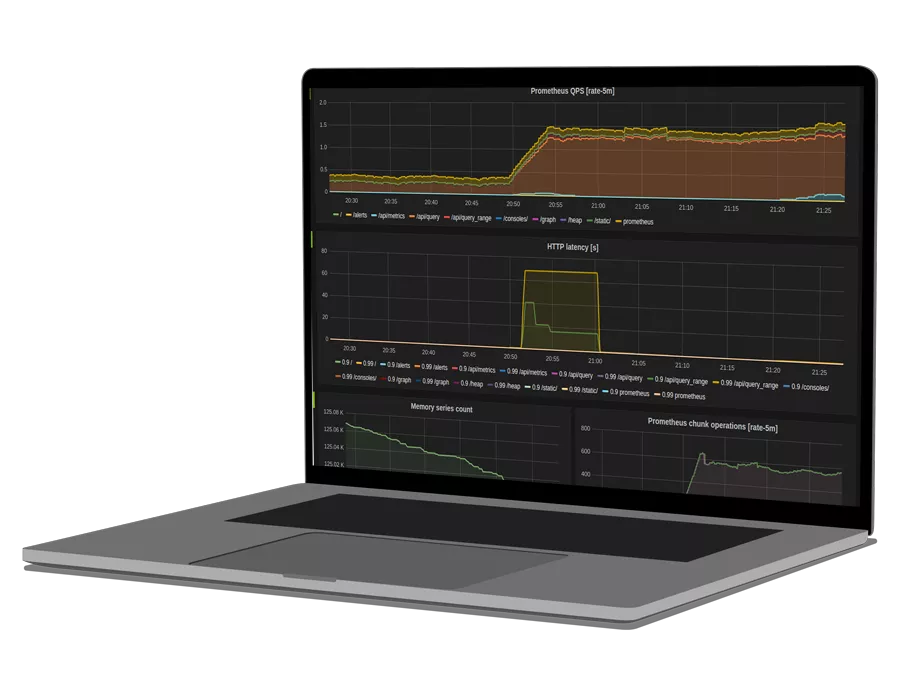

Prometheus alerting is powered by Alertmanager. Prometheus forwards its alerts to Alertmanager for handling any silencing, inhibition, aggregation, or sending of notifications across your platforms or event management systems of choice. Alertmanager also takes care of deduplicating and grouping, which we’ll go over in the following sections.

You can run Alertmanager either inside or outside of your cluster. For high availability, you can also run multiple Alertmanagers, which is strongly recommended for production systems.

Alerting Concepts

There are three important concepts to familiarize yourself with when using Alertmanager to configure alerts:

- Grouping: You can group alerts into categories (e.g., node alerts, pod alerts)

- Inhibition: You can dedupe alerts when similar alerts are firing to avoid spam

- Silencing: You can mute alerts based on labels or regular expressions

Rule File Components

A rule file uses the following basic template:

groups:

- name:

rules:

- alert:

expr:

for:

labels:

annotations:- Groups: A collection of rules that are run sequentially at a regular interval

- Name: Name of group

- Rules: The rules in the group

- Alert: A valid label name

- Expr: The condition required for the alert to trigger

- For: The minimum duration for an alert’s expression to be true (active) before updating to a firing status

- Labels: Any additional labels attached to the alert

- Annotations: A way to communicate actionable or contextual information such as a description or runbook link

Recording Intervals

Recording intervals is a setting that defines how long a threshold must be met before an alert enters a firing state. If the recording interval is too low, you might get notified for small blips in metric changes (known as false positives or noise); if the recording interval is too long, then you may not be able to solve the performance issue in time to minimize damage.

Let’s look at an example comparing two alerting rules, where the recording interval can be found as a value associated to the “for” input:

groups:

- name: ElasticSearch Alerts

rules:

- alert: ElasticSearch Status RED

annotations:

summary: "ElasticSearch Status is RED (cluster {{ $labels.cluster }})"

description: "ES Health is Critical\nCluster Name: {{$externalLabels.cluster}}"

expr: |

elasticsearch_cluster_health_status{color="red"}==1

for: 3m

labels:

team: devops

- alert: ElasticSearch Status YELLOW

annotations:

summary: "ElasticSearch Status is YELLOW (cluster {{ $labels.cluster }})"

description: "ES Health is Critical\nCluster Name: {{$externalLabels.cluster}}"

expr: |

elasticsearch_cluster_health_status{color="yellow"}==1

for: 10m

labels:

team: slack - Elasticsearch Status RED: 3 minutes

- Elasticsearch Status YELLOW: 10 minutes

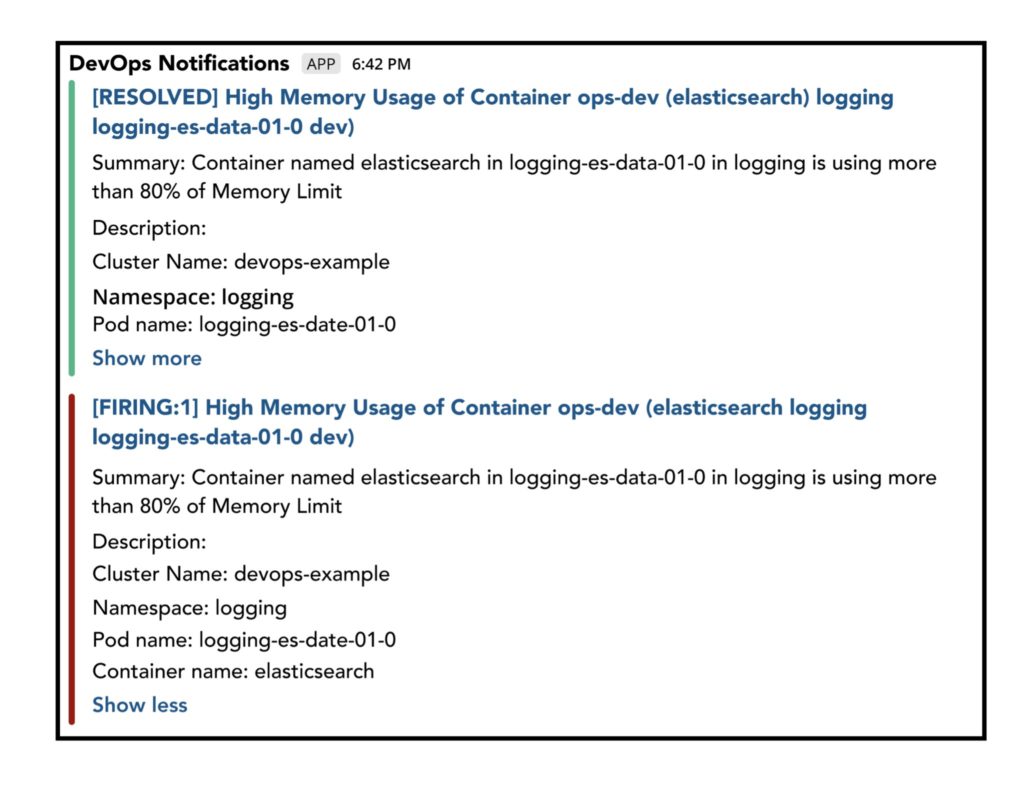

In this example, you can see that a more severe alert has a lower threshold requirement than a warning alert. Later in this article, we show the screenshot of such an alert notification displayed in a Slack channel.

Alert States

There are three main alert states to know:

- Pending: The time elapsed since threshold breach is less than the recording interval

- Firing: The time elapsed since threshold breach is more than the recording interval and alertmanager is delivering notifications

- Resolved: The alert is no longer firing because the threshold is no longer exceeded; you can optionally enable resolution notifications using `[ send_resolved: <boolean> | default = true ]` in your config file

11 Kubernetes Alerts to Know

The following sections are a single configuration file broken down into individual alerts that we recommend setting up. This is not an exhaustive list, and you can always add or remove alerts to best suit your needs.

1. Deployment at 0 Replicas

This alert triggers when your deployment has no replica pods running.

groups:

- name: Deployment groups:

rules:

- alert: Deployment at 0 Replicas

annotations:

summary: Deployment {{$labels.deployment}} is currently having no pods running

description: "\nCluster Name: {{$externalLabels.cluster}}\nNamespace:

{{$labels.namespace}}\nDeployment name: {{$labels.deployment}}\n"

expr: |

sum(kube_deployment_status_replicas{pod_template_hash=""}) by (deployment,namespace) < 1

for: 1m

labels:

team: devops

rules:

- alert: Deployment at 0 Replicas

annotations:

summary: Deployment {{$labels.deployment}} is currently having no pods running

description: "\nCluster Name: {{$externalLabels.cluster}}\nNamespace:

{{$labels.namespace}}\nDeployment name: {{$labels.deployment}}\n"

expr: |

sum(kube_deployment_status_replicas{pod_template_hash=""}) by (deployment,namespace) < 1

for: 1m

labels:

team: devops2. HPA Scaling Limited

This alert triggers when the horizontal pod autoscaler is unable to scale your deployment.

- alert: HPA Scaling Limited

annotations:

summary: HPA named {{$labels.hpa}} in {{$labels.namespace}} namespace has reached scaling limited state

description: "\nCluster Name: {{$externalLabels.cluster}}\nNamespace:

{{$labels.namespace}}\nHPA name: {{$labels.hpa}}\n"

expr: |

(sum(kube_hpa_status_condition{condition="ScalingLimited",status="true"}) by

(hpa,namespace)) == 1

for: 1m

labels:

team: devops3. HPA at Max Capacity

This alert triggers when a horizontal pod autoscaler is running at max capacity.

- alert: HPA at MaxCapacity

annotations:

summary: HPA named {{$labels.hpa}} in {{$labels.namespace}} namespace is running at Max Capacity

description: "\nCluster Name: {{$externalLabels.cluster}}\nNamespace:

{{$labels.namespace}}\nHPA name: {{$labels.hpa}}\n"

expr: |

((sum(kube_hpa_spec_max_replicas) by (hpa,namespace)) -

(sum(kube_hpa_status_current_replicas) by (hpa,namespace))) == 0

for: 1m

labels:

team: devops4. Container Restarted

This alert triggers when a container inside a pod restarted due to either an Error, an OOMKill, or a process completion.

- name: Pods

rules:

- alert: Container restarted

annotations:

summary: Container named {{$labels.container}} in {{$labels.pod}} in {{$labels.namespace}} was restarted

description: "\nCluster Name: {{$externalLabels.cluster}}\nNamespace:

{{$labels.namespace}}\nPod name: {{$labels.pod}}\nContainer name: {{$labels.container}}\n"

expr: |

sum(increase(kube_pod_container_status_restarts_total{namespace!="kube-system",pod_template_hash=""}[1m])) by (pod,namespace,container) > 0

for: 0m

labels:

team: slack5. Too Many Container Restarts

This alert triggers when a pod has gotten into a crashloop state.

- alert: Too many Container restarts

annotations:

summary: Container named {{$labels.container}} in {{$labels.pod}} in {{$labels.namespace}} was restarted

description: "\nCluster Name: {{$externalLabels.cluster}}\nNamespace:

{{$labels.namespace}}\nPod name: {{$labels.pod}}\nContainer name: {{$labels.container}}\n"

expr: |

sum(increase(kube_pod_container_status_restarts_total{namespace!="kube-system",pod_template_hash=""}[15m])) by (pod,namespace,container) > 5

for: 0m

labels:

team: dev6. High Memory Usage of Container

This alert triggers when a pod’s current memory usage is close to the memory Limit assigned to it.

- alert: High Memory Usage of Container

annotations:

summary: Container named {{$labels.container}} in {{$labels.pod}} in {{$labels.namespace}} is using more than 80% of Memory Limit

description: "\nCluster Name: {{$externalLabels.cluster}}\nNamespace:

{{$labels.namespace}}\nPod name: {{$labels.pod}}\nContainer name: {{$labels.container}}\n" expr: |

((( sum(container_memory_working_set_bytes{image!="",container!="POD", namespace!="kube-system"}) by (namespace,container,pod) /

sum(container_spec_memory_limit_bytes{image!="",container!="POD",namespace!="kube-system"}) by (namespace,container,pod) ) * 100 ) < +Inf ) > 80

for: 5m

labels:

team: dev7. High CPU Usage of Container

This alert triggers when a pod’s current CPU usage is close to the memory limit assigned to it.

- alert: High CPU Usage of Container

annotations:

summary: Container named {{$labels.container}} in {{$labels.pod}} in {{$labels.namespace}} is using more than 80% of CPU Limit

description: "\nCluster Name: {{$externalLabels.cluster}}\nNamespace:

{{$labels.namespace}}\nPod name: {{$labels.pod}}\nContainer name: {{$labels.container}}\n" expr: |

((sum(irate(container_cpu_usage_seconds_total{image!="",container!="POD", namespace!="kube-system"}[30s])) by (namespace,container,pod) /

sum(container_spec_cpu_quota{image!="",container!="POD", namespace!="kube-system"} /

container_spec_cpu_period{image!="",container!="POD", namespace!="kube-system"}) by (namespace,container,pod) ) * 100) > 80

for: 5m

labels:

team: dev8. High Persistent Volume Usage

This alert triggers when the persistent volume attached to a pod is nearly filled.

- alert: High Persistent Volume Usage

annotations:

summary: Persistent Volume named {{$labels.persistentvolumeclaim}} in {{$labels.namespace}} is using more than 60% used.

description: "\nCluster Name: {{$externalLabels.cluster}}\nNamespace:

{{$labels.namespace}}\nPVC name: {{$labels.persistentvolumeclaim}}\n"

expr: |

((((sum(kubelet_volume_stats_used_bytes{}) by

(namespace,persistentvolumeclaim)) /

(sum(kubelet_volume_stats_capacity_bytes{}) by

(namespace,persistentvolumeclaim)))*100) < +Inf ) > 60

for: 5m

labels:

team: devops9. High Node Volume Usage

This alert triggers when a Kubernetes node is reporting high memory usage.

- name: Nodes

rules:

- alert: High Node Memory Usage

annotations:

summary: Node {{$labels.kubernetes_io_hostname}} has more than 80% memory used. Plan Capacity

description: "\nCluster Name: {{$externalLabels.cluster}}\nNode:

{{$labels.kubernetes_io_hostname}}\n"

expr: |

(sum (container_memory_working_set_bytes{id="/",container!="POD"}) by

(kubernetes_io_hostname) / sum (machine_memory_bytes{}) by (kubernetes_io_hostname) * 100) > 80

for: 5m

labels:

team: devops10. High Node CPU Usage

This alert triggers when a Kubernetes node is reporting high CPU usage.

- alert: High Node CPU Usage

annotations:

summary: Node {{$labels.kubernetes_io_hostname}} has more than 80% allocatable cpu used. Plan Capacity.

description: "\nCluster Name: {{$externalLabels.cluster}}\nNode:

{{$labels.kubernetes_io_hostname}}\n"

expr: |

(sum(rate(container_cpu_usage_seconds_total{id="/",

container!="POD"}[1m])) by (kubernetes_io_hostname) / sum(machine_cpu_cores) by (kubernetes_io_hostname) * 100) > 80

for: 5m

labels:

team: devops11. High Node Disk Usage

This alert triggers when a Kubernetes node is reporting high disk usage.

- alert: High Node Disk Usage

annotations:

summary: Node {{$labels.kubernetes_io_hostname}} has more than 85% disk used. Plan Capacity.

description: "\nCluster Name: {{$externalLabels.cluster}}\nNode:

{{$labels.kubernetes_io_hostname}}\n"

expr: |

(sum(container_fs_usage_bytes{device=~"^/dev/[sv]d[a-z][1-9]$",id="/",container!="POD"}) by (kubernetes_io_hostname) /

sum(container_fs_limit_bytes{container!="POD",device=~"^/dev/[sv]d[a-z][1-9]$",id="/"}) by (kubernetes_io_hostname)) * 100 > 85

for: 5m

labels:

team: devopsSee how OpsRamp enhances Prometheus in minutes with Prometheus Cortex

How to Configure Alertmanager Endpoints

Prometheus can be made aware of Alertmanager by adding Alertmanager endpoints to the Prometheus configuration file. If you have multiple instances of Alertmanager running for high availability, make sure all Alertmanager endpoints are provided. Note: Do not load balance traffic between Prometheus and multiple Alertmanager endpoints. The Alertmanager implementation expects all alerts to be sent to all Alertmanagers to ensure high availability.

A Prometheus configuration for Alertmanager looks something like this:

alerting:

# We want our alerts to be deduplicated

# from different replicas.

alert_relabel_configs:

- regex: prometheus_replica

action: labeldrop

alertmanagers:

- scheme: http

path_prefix: /

static_configs:

- targets: ['alertmanager:9093']Notification Channels

Alertmanager integrates with a ton of notification providers such as Slack, VictorOps, and Pagerduty. It also has a webhook delivery system which you can integrate with any event management system. The complete list of supported notification channels can be found here.

A typical Alertmanager configuration file may look something like this:

In this configuration file, we have defined two receivers named “devops” and “slack.” Alerts are matched to each receiver based on the team label in the alert metric. In this particular case, the devops receiver delivers alerts to a Slack channel and Opsgenie on-call personnel. The slack receiver only delivers the alert to a Slack channel

global:

resolve_timeout: 5m

slack_api_url: "https://hooks.slack.com/services/"

templates:

- '/etc/alertmanager-templates/*.tmpl'

route:

group_by: ['alertname', 'cluster', 'service']

group_wait: 10s

group_interval: 1m

repeat_interval: 5m

receiver: default

routes:

- match:

team: devops

receiver: devops

continue: true

- match:

team: slack

receiver: slack

continue: true

receivers:

- name: 'default'

- name: 'devops'

opsgenie_configs:

- api_key:

priority: P1

slack_configs:

- channel: '#infra-alerts'

send_resolved: true

icon_url: https://avatars3.githubusercontent.com/u/3380462

text: " \nSummary: {{ .CommonAnnotations.summary }}\nDescription: {{

.CommonAnnotations.description }} "

- name: 'slack'

slack_configs:

- channel: '#infra-alerts'

send_resolved: true

icon_url: https://avatars3.githubusercontent.com/u/3380462

text: " \nSummary: {{ .CommonAnnotations.summary }}\nDescription: {{

.CommonAnnotations.description }} "

Best Practices

Use Labels for Severity and Paging

Alert notification channels are matched using labels. This ensures that you still get notified for each alert (for example, in a Slack channel as a public log) but that only the most important alerts prompt direct paging.

Tune Alert Thresholds

Keeping alert thresholds too low or setting low recording intervals can lead to false positives. These settings should be fine-tuned based on your performance and usage patterns; expect an iterative process to discover what values work best for your infrastructure and application stack.

Mute Alerts During Debugging

Avoid the noise (and panic) alerts can cause while you are debugging by temporarily muting your alerts.

Assign Alerts Directly to Teams

Map your alerts to areas of responsibility definable by roles and teams. This makes ownership more clear and enables your teams to promptly handle any issues that arise. Alert mapping also allows your teams to trust when an alert is relevant to them, reducing the risk of an important alert going ignored.

For more best practices, check out the official Alertmanager documentation.

Limitations

1. Event Correlation

Alertmanager provides one view of your environment and needs to be combined with other monitoring tools separately deployed to watch your full application stack. This can be accomplished using a centralized event correlation engine. For example, you may have a microservice hosted in a Kubernetes container that stops responding. The root cause may reside in your application code, a third-party API, public cloud services, or a database hosted in a private cloud with its own dedicated network and storage systems. A centralized event correlation engine would process events from all of your data sources and help isolate the root cause based on the sequence of event occurrences and the system dependencies.

2. Machine Learning

Alertmanager is a set of robust alerting rules and recording rules; however, it does not support machine learning for learning trends, accounting for seasonality, or detecting anomalies. With hundreds of thousands of ephemeral monitoring endpoints, the use of machine learning (also known as AIOps) should be a required ingredient of any modern monitoring strategy.

3. Alert Management Workflow

Alertmanager provides no way for a user to interact with an alert to acknowledge, prioritize, claim ownership, troubleshoot, and finally resolve the issue. Advanced incident management tools provide the ability to integrate Alertmanager via its API and offer an operational workflow management functionality designed for teamwork.

4. Automation

Alertmanager is most powerful when combined with an automation tool that would accept Prometheus events as input and use them to trigger run-books that would automate the action-taking required to resolve the underlying cause of the problem. For example, all of the simple and repetitive tasks (such as rebooting a system or increasing computing resources) raised from recurring problems (such as a server running out of memory or a hung process) would be taken care of without human interaction so that the operations team can focus on troubleshooting the more complex issues.

See how OpsRamp enhances Prometheus in minutes with Prometheus Cortex

Closing words

Prometheus alerting is a powerful tool that is free and cloud-native. Alertmanager makes it easy to organize and define your alerts; however, it is important to integrate it with other tools used to monitor your application stack by feeding its events into specialized tools that offer event correlation, machine learning, and automation functionality.

Understand the differences between Prometheus and Grafana and learn about an alternative.

You like our article?

Follow our monthly hybrid cloud digest on LinkedIn to receive more free educational content like this.

Powered By Prometheus Cortex

Learn MoreNative support for PromQL across dozens of integrations

Automated event correlation powered by machine learning

Visual workflow builder for automated problem resolution