This article requires some understanding of the inner workings of Kubernetes. If you are not familiar with the Kubernetes building blocks, we recommend starting with our article devoted to explaining the Kubernetes architecture.

A hosted Kubernetes service is a public cloud service, offered by vendors such as Google, Amazon Web Services (AWS), and Microsoft Azure, that avoids the upfront capital investment required to deploy a Kubernetes cluster in a data center and reduces part of its administrative overhead.

Public cloud providers let customers choose between outsourcing only the management of the Kubernetes control plane and outsourcing the control plane plus the administration of the worker nodes. The control plane contains the orchestration logic, while the worker nodes host the application workloads.

When customers outsource both responsibilities, they only provision Kubernetes pods (the smallest deployable unit in Kubernetes, comprising one or more containers) and pay for the pods’ usage. In this scenario, the cloud provider manages all underlying Kubernetes cluster resources, such as nodes and networks, along with related functionality such as high availability and autoscaling. This article explains the differences between hosted container services and compares the offerings from the top three public cloud service providers.
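The split of responsibilities described above can be sketched as a small data model. This is only an illustration: the class, field, and constant names are hypothetical and do not correspond to any provider API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ServiceModel:
    """Who operates each layer of the cluster under a given offering."""
    name: str
    control_plane: str  # "provider" or "customer"
    worker_nodes: str   # "provider" or "customer"


# Hypothetical labels for the two outsourcing options discussed in the text.
HOSTED_CONTROL_PLANE = ServiceModel("hosted control plane", "provider", "customer")
FULLY_OUTSOURCED = ServiceModel("control plane plus worker nodes", "provider", "provider")


def customer_responsibilities(model: ServiceModel) -> List[str]:
    """Return the layers the customer still operates; pods are always theirs."""
    layers = ["pods"]
    if model.worker_nodes == "customer":
        layers.append("worker nodes")
    if model.control_plane == "customer":
        layers.append("control plane")
    return layers


if __name__ == "__main__":
    for model in (HOSTED_CONTROL_PLANE, FULLY_OUTSOURCED):
        print(f"{model.name}: {customer_responsibilities(model)}")
```

In this sketch the customer always keeps the pods, and each outsourced layer simply drops out of the list.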

The Timeline

Soon after Google open-sourced Kubernetes in 2014 and donated the project to the Cloud Native Computing Foundation (CNCF) in 2015, Amazon Web Services (AWS) and Google (later joined by Microsoft Azure) began offering hosted container services that grew over time in functionality and convenience. The diagram below shows the introduction timeline of the related AWS and Google Cloud services.

AWS has been leading the market in container services even though Google created the Kubernetes technology. AWS launched Elastic Container Service (ECS), which is not based on Kubernetes, in 2015 and Elastic Kubernetes Service (EKS) in 2018. AWS was also the first vendor to introduce a “serverless” option (known as AWS Fargate) that outsources the worker nodes in addition to the control plane. Fargate is a “serverless” container service because the provider, not the customer, manages the virtual machines forming the cluster nodes. Google’s GKE Autopilot now offers an almost identical service, although it is positioned more as a “managed” hosted service than a “serverless” cloud service and gives some additional control over workload provisioning, covered later in this article.

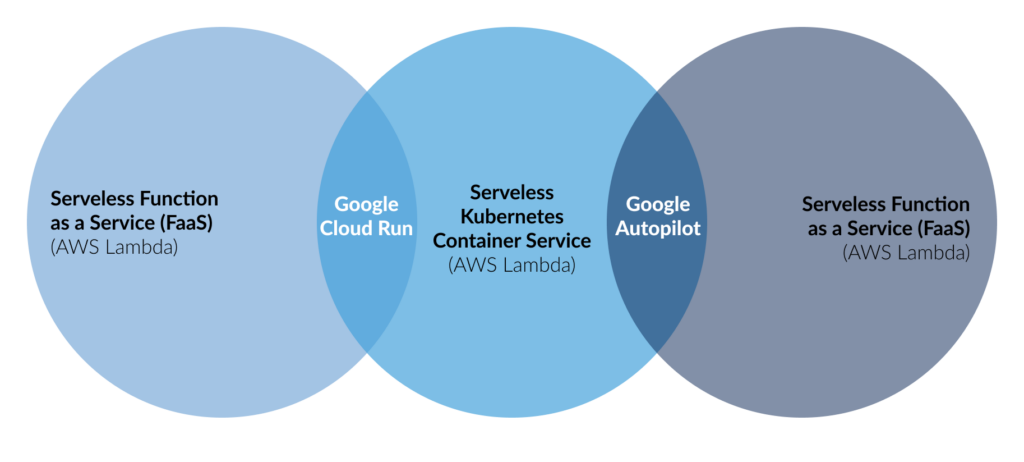

The timeline diagram above also captures the introduction sequence of AWS Lambda, Google Cloud Functions, and Google Cloud Run. AWS Lambda, launched in 2014, was the first cloud offering to start the serverless paradigm known as Function as a Service (FaaS), whereby developers provide the software code without worrying about the infrastructure components required to run it. A key advantage of FaaS is that cloud providers charge only while a function actively runs (and charge nothing while it is idle). Google Cloud Run, launched in 2019, blurred the line between a hosted container service and FaaS: it offers serverless functionality similar to AWS Lambda but exposes the container used to provision the run-time environment (more on this later in the article).

Hosted Control Plane

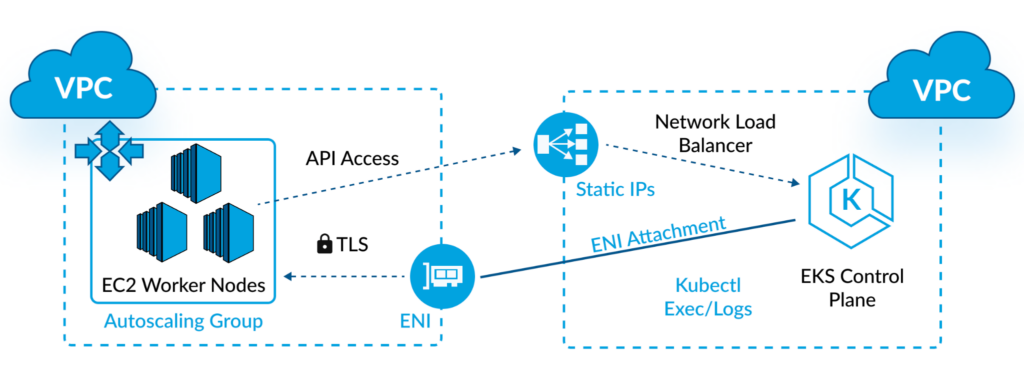

In a hosted control plane offering, using AWS as an example, the service provider operates, scales, and upgrades the software running the control plane without any downtime, so customers can focus on the worker nodes that host the application workloads. For security and administrative control, the control plane and the worker nodes are separated into two Virtual Private Clouds (VPCs) connected by Elastic Network Interfaces (ENIs), which act as configurable gatekeepers for the traffic between the Kubernetes API server and the nodes. Users can launch Kubernetes pods through a command-line interface (CLI) or a console connected to the control plane. Users are also responsible for adding and removing worker nodes, which they can automate with the Kubernetes Cluster Autoscaler and AWS Auto Scaling groups (ASGs).
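To make the node-scaling responsibility concrete, here is a minimal sketch of the kind of sizing decision that gets automated. It is a deliberate simplification and not the Cluster Autoscaler algorithm, which evaluates whether pending pods can actually be scheduled; the function name and parameters are hypothetical. CPU is expressed in millicores, the unit Kubernetes uses for CPU requests.

```python
from typing import List


def desired_node_count(pod_cpu_millicores: List[int], node_cpu_millicores: int,
                       min_nodes: int, max_nodes: int) -> int:
    """Estimate the worker nodes needed for the requested CPU, within group bounds."""
    total = sum(pod_cpu_millicores)
    # Integer ceiling division: a partially filled node still counts as a node.
    needed = -(-total // node_cpu_millicores)
    # Clamp to the minimum and maximum size configured on the node group.
    return max(min_nodes, min(max_nodes, needed))


if __name__ == "__main__":
    pods = [500] * 10  # ten pods requesting 500 millicores each
    print(desired_node_count(pods, node_cpu_millicores=2000, min_nodes=1, max_nodes=10))
```

The clamping step mirrors the minimum and maximum sizes that an autoscaling group enforces regardless of demand.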

The users pay a flat fee for launching a new Kubernetes cluster and separately pay for the usage of the provisioned worker nodes (standard virtual machines). Google Kubernetes Engine (GKE) and Azure Kubernetes Service (AKS) have a similar service architecture and pricing model.
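As a rough illustration of this pricing model, the sketch below adds a per-cluster fee to the charges for the provisioned nodes. Modeling the cluster fee as an hourly rate is an assumption, and every rate in the example is made up; consult the provider's price list for real figures.

```python
def hosted_control_plane_cost(hours: float, cluster_fee_per_hour: float,
                              node_count: int, node_price_per_hour: float) -> float:
    """Cluster fee plus node charges; nodes are billed whether or not pods fill them."""
    cluster_fee = hours * cluster_fee_per_hour
    node_charges = hours * node_count * node_price_per_hour
    return cluster_fee + node_charges


if __name__ == "__main__":
    # Hypothetical rates, chosen only to show the shape of the calculation.
    monthly = hosted_control_plane_cost(hours=730, cluster_fee_per_hour=0.10,
                                        node_count=3, node_price_per_hour=0.05)
    print(f"Estimated monthly cost: {monthly:.2f}")
```

Note that the node charges depend on how many nodes are provisioned, not on how many pods run on them.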

Serverless Container Service

A serverless container service abstracts both the control plane and the worker nodes, leaving users in control of provisioning Kubernetes pods (groups of one or more containers) and paying only for pod usage (not for the worker nodes). The service provider adds and removes nodes to supply the computing resources the pods need and also upgrades and patches the infrastructure software running on the nodes (operating system, network, and storage device drivers).
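Pod-based billing can be sketched as follows, assuming the provider charges for the vCPU and memory each pod requests for as long as it runs. The `PodUsage` type and the rates are hypothetical and only illustrate the arithmetic.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PodUsage:
    vcpu: float       # vCPUs requested by the pod
    memory_gb: float  # memory requested by the pod, in GB
    hours: float      # how long the pod ran


def pod_based_cost(pods: List[PodUsage], vcpu_price_per_hour: float,
                   gb_price_per_hour: float) -> float:
    """Sum the charges for each pod's requested resources over its run time."""
    total = 0.0
    for pod in pods:
        hourly = pod.vcpu * vcpu_price_per_hour + pod.memory_gb * gb_price_per_hour
        total += pod.hours * hourly
    return total


if __name__ == "__main__":
    usage = [PodUsage(vcpu=0.5, memory_gb=1.0, hours=10.0),
             PodUsage(vcpu=1.0, memory_gb=2.0, hours=5.0)]
    # Hypothetical rates, not taken from any price list.
    print(f"{pod_based_cost(usage, vcpu_price_per_hour=0.04, gb_price_per_hour=0.005):.2f}")
```

Under this model, a cluster with no running pods costs nothing, because there is no node charge to pay for idle capacity.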

AWS was the first to launch this type of serverless container service and allows some additional flexibility, such as using spot instances (purchased on the AWS spot market at up to a 90% discount) and mixing self-managed node groups (a node group is a subset of the nodes within a cluster) with AWS-managed node groups. Azure offers a service similar to AWS Fargate for EKS by combining AKS with Azure Container Instances (ACI).

Managed Cluster

Google found a gap between user demand and the existing cloud container services and filled it with GKE Autopilot. This offering is similar to a serverless container service but allows additional control through Kubernetes API resources, including DaemonSets, Jobs, and admission controllers, used for provisioning different types of application workloads. This approach lets users benefit from outsourcing the worker node management and from a pricing model based on pod usage while retaining additional control over the Kubernetes cluster.

The table below summarizes various aspects of the hosted container services described in this article.

| Aspect | Hosted Control Plane | Serverless Container Service | Managed Cluster |
|---|---|---|---|
| Example | Google GKE, AWS EKS, Azure AKS | AWS Fargate, Azure ACI | Google GKE Autopilot |
| Control plane upgrade process | Manual | Automated | Automated, or relatively easy |
| Node group upgrade process | Manual | Not applicable | Automated (GKE) |
| Metrics | Control plane metrics | Pod metrics available | Control plane metrics |
| Security | Depends partly on the security of the cloud provider and partly on the user | Depends on the security policies of the cloud provider | Depends on the security policies of the cloud provider |
| Configuration capability | More configurable than a serverless container service | The least configurable option with a fully managed service | A bit more control relative to a serverless container service |

Conclusion

Leading cloud service providers have launched new offerings in recent years that increasingly outsource Kubernetes cluster management tasks. With each offering, customers can spend more time managing their applications and less time managing the infrastructure. The proper selection depends on the required level of control and the application’s architecture. For example, an application with a high demand for IOPS that is migrating from a data center to the public cloud would require more control and therefore be a better match for a service that only outsources the control plane. On the other hand, a new and primarily stateless application based on microservices, without any unique network or storage needs, would be a natural candidate for a serverless container service.
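The selection guidance above can be condensed into a small decision helper. This is a sketch under simplifying assumptions: the two boolean inputs are hypothetical stand-ins for "required level of control", and a real decision would weigh cost, compliance, and workload specifics as well.

```python
def recommend_service(needs_node_control: bool, needs_cluster_api_control: bool) -> str:
    """Map the required level of control to one of the three service categories."""
    if needs_node_control:
        # Example: workloads with demanding storage or network requirements.
        return "hosted control plane"
    if needs_cluster_api_control:
        # Example: workloads relying on DaemonSets or admission controllers.
        return "managed cluster"
    # Example: stateless microservices with no special infrastructure needs.
    return "serverless container service"


if __name__ == "__main__":
    print(recommend_service(needs_node_control=True, needs_cluster_api_control=False))
    print(recommend_service(needs_node_control=False, needs_cluster_api_control=False))
```

The order of the checks reflects the idea that the need for node-level control outweighs the convenience of the more managed options.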
