For the last decade, virtual machines (VMs) have been the backbone of software applications deployed to the cloud, and they still offer a great deal of proven maturity. However, when it comes to application portability and delivery, containerization has overtaken virtualization.

Today, it’s common for organizations to operate thousands of short-lived containers, each configured according to different workload requirements. These containers must be provisioned, connected to a network, secured, replicated, and eventually terminated. One person can manually configure dozens of containers, but operating thousands of them across a large enterprise environment would take a large team.

As this management-scaling problem became more apparent in enterprise container use cases, it created an opportunity for orchestration tools like Kubernetes. In this article, we’ll look at the challenges of managing containerized applications without Kubernetes, especially in a public cloud. But first, let’s talk a bit more about Kubernetes.

What is Kubernetes?

Kubernetes is a container orchestration platform that we introduce in this article and cover in depth in future articles. Commonly referred to as K8s (the 8 stands for the eight letters between the “K” and the “s”), Kubernetes is an open-source project and the industry’s de facto standard for automating the deployment, scaling, and administration of containerized applications.

Scaling Requires Task Automation

Managing containers at scale is challenging without automating resource allocation, load balancing, and security enforcement. The list below highlights some of the tasks that require automation:

- Provisioning containers based on predefined images (OS, dependencies, libraries)

- Balancing incoming traffic across groups of similar containers

- Adjusting the number of containers based on workload demand (horizontal scaling)

- Allocating the right amount of CPU and memory for each container (vertical scaling)

- Configuring network ports to enable secure communication between containers

- Connecting and removing storage systems attached to containers

- Restarting containers if they fail
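To make one of these tasks concrete, here is a minimal Python sketch of balancing incoming traffic across a group of similar containers using round-robin, one common strategy. The `RoundRobinBalancer` class and the backend addresses are hypothetical; in Kubernetes, Services handle this for you, and the exact backend-selection strategy depends on how the cluster's kube-proxy is configured.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical toy load balancer: hands out backends in strict rotation."""

    def __init__(self, backends):
        backends = list(backends)
        if not backends:
            raise ValueError("at least one backend is required")
        # cycle() repeats the backend list forever, in order
        self._rotation = cycle(backends)

    def next_backend(self):
        # Each request goes to the next container in the rotation
        return next(self._rotation)


balancer = RoundRobinBalancer(["web-1:8080", "web-2:8080", "web-3:8080"])
for request_id in range(5):
    # Requests go to web-1, web-2, web-3, web-1, web-2 in turn
    print(request_id, "->", balancer.next_backend())
```

Note that the backend list here is fixed: without an orchestrator, someone would have to update it by hand every time a container starts, fails, or is terminated.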

4 Container Management Challenges Made Easier With an Orchestration Tool

Below, we dig deeper into four of the many tasks requiring automation in container administration.

1. Deploying

Deploying an application requires many steps. Here are a few examples:

- Installing device drivers that manage the server hardware modules

- Installing the latest operating system patches

- Installing software libraries required by the application to run

- Ensuring communication with needed services such as a database

- Making sure the IP address is registered with the Domain Name System (DNS)

- Installing virus and vulnerability scanners

- Scheduling backups

Most infrastructure deployment automation tools that predate Kubernetes take a procedural approach to configuration: you spell out the sequence of steps to execute. This approach is known as imperative. Ansible and Chef are examples of configuration automation tools that work this way; Puppet, also widely used in this space, instead describes resources declaratively.

An early and important decision during Kubernetes’ inception was the adoption of a declarative model. With a declarative approach, you do not define the steps needed to reach an outcome; instead, you declare the final desired state. Kubernetes then continuously adjusts the configuration to reach and maintain that state. Because it abstracts away the complex intermediate steps, the declarative approach saves a lot of time: the focus shifts from the ‘how’ to the ‘what.’
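The contrast between the two models can be sketched in a few lines of Python. This is a minimal illustration, not Kubernetes code: the `Cluster` class and `reconcile` function are hypothetical, and "actual state" is just a dictionary of replica counts. You declare only the desired state; the loop works out which steps to take, and running it again after a failure restores the declared state. Kubernetes controllers apply the same compare-and-converge idea continuously.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical stand-in for actual state: app name -> running replicas."""
    running: dict = field(default_factory=dict)


def reconcile(desired: dict, cluster: Cluster) -> list:
    """Move actual state toward desired state; return the actions taken."""
    actions = []
    # Start or stop replicas so each declared app matches its desired count
    for app, want in desired.items():
        have = cluster.running.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} x {app}")
        elif have > want:
            actions.append(f"stop {have - want} x {app}")
        cluster.running[app] = want
    # Stop anything that is running but no longer declared
    for app in list(cluster.running):
        if app not in desired:
            actions.append(f"stop {cluster.running.pop(app)} x {app}")
    return actions


desired = {"web": 3, "db": 1}                  # the "what", declared once
cluster = Cluster(running={"web": 1, "legacy": 2})

print(reconcile(desired, cluster))  # ['start 2 x web', 'start 1 x db', 'stop 2 x legacy']
print(reconcile(desired, cluster))  # []  (already converged)
cluster.running["web"] = 2          # simulate a crashed container
print(reconcile(desired, cluster))  # ['start 1 x web']
```

The caller never writes the "start two web replicas, then stop legacy" sequence; it is derived from the difference between the two states.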

2. Managing the Lifecycle

Docker is one example of a container runtime engine, which packages your application and all its dependencies together for delivery to any runtime environment; containerd is another. Below are some of the more common events in a container’s lifecycle that a runtime engine handles:

- Pull an image from a registry such as Docker Hub

- Create a container based on an image

- Start one or more stopped containers

- Stop one or more running containers

One person can complete these tasks when managing a small number of containers on a few hosts. However, doing them manually breaks down in an enterprise environment with hundreds of nodes and thousands of containers.
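The four lifecycle events listed above can be modeled as a small state machine. The Python sketch below tracks a single container and rejects invalid transitions. It is a simplification: real runtimes track additional states (such as paused or restarting), and the `absent` and `image_ready` labels are hypothetical.

```python
# Simplified container lifecycle as (state, event) -> next state.
# "absent" and "image_ready" are hypothetical labels; "created", "running",
# and "exited" mirror status values that Docker reports.
TRANSITIONS = {
    ("absent", "pull"): "image_ready",
    ("image_ready", "create"): "created",
    ("created", "start"): "running",
    ("running", "stop"): "exited",
    ("exited", "start"): "running",
}


def apply_event(state: str, event: str) -> str:
    """Return the next state, or raise if the event is invalid in this state."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"cannot {event!r} a container in state {state!r}") from None


state = "absent"
for event in ["pull", "create", "start", "stop", "start"]:
    state = apply_event(state, event)
    print(f"{event:>6} -> {state}")
```

Tracking this for one container is trivial; keeping thousands of such state machines correct across hundreds of nodes is the bookkeeping an orchestrator takes over.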

Kubernetes allows you to simply “declare” what you want to accomplish rather than code the intermediate steps. By using a container orchestration platform, you achieve the following benefits:

- Scaling your applications and infrastructure easily

- Service discovery and container networking

- Improved governance and security controls

- Container health monitoring

- Load balancing of containers evenly among hosts

- Optimal resource allocation

- Container lifecycle management

3. Configuring Network

One of the most complex and least appreciated aspects of managing multiple containers is network configuration. It is required so that containers can communicate with each other and with networks beyond the cluster. What complicates this process is that containers start and terminate rapidly, and a single mistake could lead to a security exposure. Without automation tools, teams must configure the network identity of every application and load-balancing component and set up security controls for ingress and egress traffic.

With Kubernetes, software teams declare the desired networking state for an application before it is deployed. Kubernetes then assigns a single IP address to each Pod (the smallest unit of container aggregation and management), and all the containers in that Pod share it. This approach aligns network identity with application identity, reduces complexity in the container networking layer, and makes maintenance at scale easier.
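The one-IP-per-Pod model can be illustrated with a toy allocator built on Python's standard `ipaddress` module. This is a sketch under simplifying assumptions: `PodIPAllocator` is hypothetical, `10.244.1.0/24` is an arbitrary example range, and in a real cluster address assignment is performed by the network plugin (CNI), not by application code.

```python
import ipaddress


class PodIPAllocator:
    """Hypothetical, simplified IP allocator: one IP per Pod from a Pod CIDR."""

    def __init__(self, cidr: str):
        # Usable host addresses in the range, in ascending order
        self._free = list(ipaddress.ip_network(cidr).hosts())
        self._assigned = {}  # Pod name -> IP address

    def assign(self, pod: str, containers: list) -> dict:
        """Give the Pod an IP (if it has none) and return each container's address."""
        if pod not in self._assigned:
            if not self._free:
                raise RuntimeError("Pod CIDR exhausted")
            self._assigned[pod] = self._free.pop(0)
        ip = self._assigned[pod]
        # All containers in the Pod share its IP; only their ports differ
        return {name: f"{ip}:{port}" for name, port in containers}

    def release(self, pod: str) -> None:
        # When the Pod terminates, its IP goes back into the pool
        self._free.append(self._assigned.pop(pod))


alloc = PodIPAllocator("10.244.1.0/24")
print(alloc.assign("web", [("app", 8080), ("log-agent", 9000)]))
# {'app': '10.244.1.1:8080', 'log-agent': '10.244.1.1:9000'}
print(alloc.assign("api", [("app", 8080)]))
# {'app': '10.244.1.2:8080'}
```

Because each Pod has its own address, the `web` and `api` Pods can both listen on port 8080 without conflict, while containers inside one Pod must use distinct ports.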

4. Scaling

Scaling infrastructure to match an application’s workload requirements has always been a challenge.

Suppose you are managing a few microservices running on a single server, and you are responsible for deploying, scaling, and securing them. Management may not be too difficult, assuming they’re all developed similarly (same language, same OS). But what if you need to scale to a thousand deployments of different types, moving between on-premises servers and the cloud? In that scenario, the challenges include:

- Identifying containers that are under- or over-allocated

- Knowing whether your applications are appropriately load-balanced across multiple servers

- Knowing whether your resource cluster contains enough nodes for peak usage times

- Rolling back or updating all applications

- Modifying all deployments through a centralized portal or CLI

- Enforcing your security standards across all infrastructure

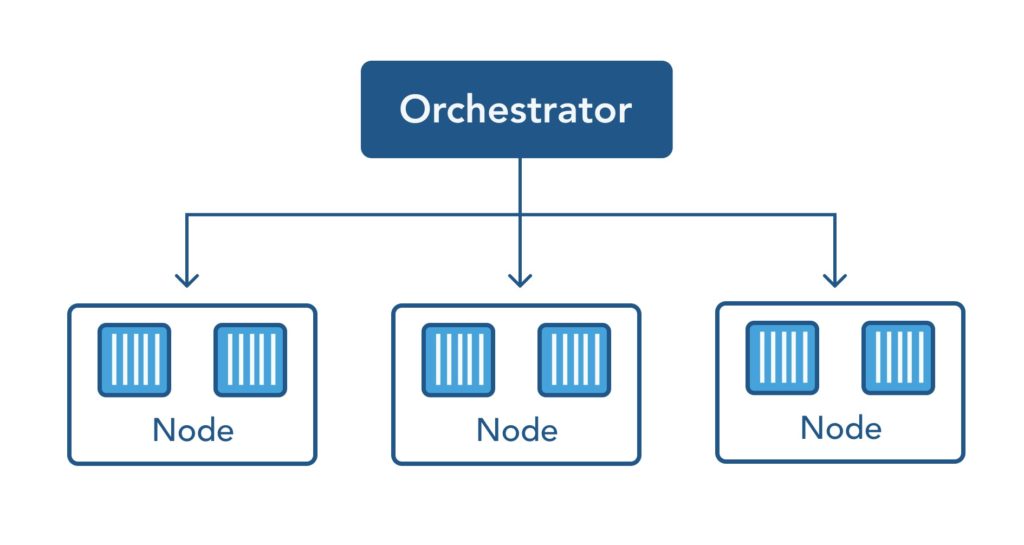

Kubernetes automates the workflows required to provide this kind of functionality. It groups machines, whether physical servers or virtual machines, into clusters of nodes, and groups containers into Pods. You declare your desired state, and Kubernetes takes over administration to achieve the availability, performance, and security you specified.
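Adjusting the number of replicas to match demand is one of these automated workflows. The Kubernetes Horizontal Pod Autoscaler documentation describes its core rule as desiredReplicas = ceil[currentReplicas × (currentMetricValue / desiredMetricValue)]. The Python sketch below applies that rule to average CPU utilization. The 0.1 tolerance reflects the default described in that documentation, the replica bounds are illustrative, and the real controller also handles details this sketch ignores, such as missing metrics and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count from the HPA-style rule ceil(current * current_metric / target)."""
    ratio = current_metric / target_metric
    # Do nothing if the metric is already close enough to the target
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds
    return max(min_replicas, min(max_replicas, wanted))


# Average CPU utilization (%) across replicas vs. a 60% target
print(desired_replicas(4, 90, 60))   # 6  (scale out)
print(desired_replicas(4, 30, 60))   # 2  (scale in)
print(desired_replicas(4, 63, 60))   # 4  (within tolerance, no change)
print(desired_replicas(8, 300, 60))  # 10 (capped at max_replicas)
```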

Container Orchestration

Container runtime engines such as Docker provide an OS-level virtualization platform for running containers on a physical server or a virtual machine; however, they don’t handle administrative or orchestration tasks. Those responsibilities fall to an orchestrator such as Kubernetes. Container orchestration automates the scheduling, deployment, networking, scaling, health monitoring, and management of containers.

Here is a summarized list of the functionality provided by Kubernetes:

| Feature | What it does |
|---|---|
| Service discovery and load balancing | Identifies containers and balances traffic across them |
| Storage orchestration | Automatically mounts the storage system of your choice |
| Automated rollouts and rollbacks | Rolls out changes to the desired state at a controlled rate and rolls back if something goes wrong |
| Automatic bin packing | Places containers on nodes based on their CPU and memory requests |
| Self-healing | Restarts failed or unhealthy containers |
| Secret and configuration management | Stores and manages sensitive data such as passwords and keys, along with application configuration |
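Automatic bin packing is easiest to picture with a classic heuristic. The Python sketch below uses first-fit decreasing: it sorts Pods by their CPU and memory requests and places each one on the first node with enough room. This is an illustration only; kube-scheduler actually filters candidate nodes and then scores them with configurable plugins, and the `Node` type, node sizes, and Pod requests here are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_m: int    # free CPU in millicores
    mem_mi: int   # free memory in MiB
    pods: list = field(default_factory=list)


def first_fit_decreasing(requests: dict, nodes: list) -> dict:
    """Map each Pod name to a node name, or None if no node has room."""
    placement = {}
    # Largest requests first (ordered by CPU, then memory)
    for pod, (cpu, mem) in sorted(requests.items(), key=lambda kv: kv[1], reverse=True):
        placement[pod] = None  # stays None (unschedulable) unless a node fits
        for node in nodes:
            if node.cpu_m >= cpu and node.mem_mi >= mem:
                node.cpu_m -= cpu
                node.mem_mi -= mem
                node.pods.append(pod)
                placement[pod] = node.name
                break
    return placement


nodes = [Node("node-a", 2000, 4096), Node("node-b", 2000, 4096)]
requests = {"api": (1000, 2048), "web": (500, 512), "worker": (1500, 1024),
            "cache": (250, 1024), "ml-job": (2500, 1024)}
print(first_fit_decreasing(requests, nodes))
# {'ml-job': None, 'worker': 'node-a', 'api': 'node-b', 'web': 'node-a', 'cache': 'node-b'}
```

A Pod that fits nowhere, like `ml-job` here, comes back as `None`; in Kubernetes, an unschedulable Pod similarly remains Pending until resources become available.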

Conclusion

Managing containerized applications in a large-scale production environment presents DevOps teams with multiple tasks related to optimal deployment methods, networking configurations, security management, and scalability, to name a few. Automation and orchestration are required to manage hundreds or thousands of containers. Kubernetes offers functionality such as self-healing, automated rollouts/rollbacks, container lifecycle management, a declarative deployment model, and rich scaling capabilities for both nodes and containers.

This quote from Spotify sums up the benefits of Kubernetes well: “Our internal teams have less of a need to focus on manual capacity provisioning and more time to focus on delivering features for Spotify.”

Did you like our article?


Follow our monthly hybrid cloud digest on LinkedIn to receive more free educational content like this.
